This tutorial will show you how to compute the radar cross section (RCS) for a dielectric sphere by sampling scattered near fields on a closed surface surrounding the sphere, and transforming them to observation points far away.

This example demonstrates the usefulness of the near field to far field transformation for reducing the simulation size needed for structures involving lots of empty space.

To obtain the scattered field, we will use a total-field/scattered-field source (TFSF), and measure the scattered fields in the region surrounding the sphere. For a more detailed demonstration on TFSF, please refer to the tutorial.

Then, we'll show how to use a near-field to far-field transformation in Tidy3D to compute the RCS for the sphere either on the cloud during the simulation run, or on your local machine afterwards.

If you are new to the finite-difference time-domain (FDTD) method, we highly recommend going through our FDTD101 tutorials.

# standard python imports

import numpy as np

import matplotlib.pyplot as plt

# tidy3d imports

import tidy3d as td

import tidy3d.web as web

Define Simulation Parameters¶

We first need to define our simulation parameters and the structure.

# radius and location of the sphere

radius = 0.5

center = [0, 0, 0]

# permittivity of the sphere

epsr = 4

# free space central wavelength

wavelength = (2.0 * radius) / 2.0

f0 = td.C_0 / wavelength

# distance between the surface of the sphere and the start of the PML layers along each cartesian direction

buffer_PML = 3 * wavelength

# distance between the sphere and the near field monitor along each cartesian direction

buffer_mon = 1 * wavelength

# distance between the sphere and the TFSF source region along each cartesian direction

buffer_tfsf = 0.5 * wavelength

# Define material properties

air = td.Medium(permittivity=1)

diel = td.Medium(permittivity=epsr)

# resolution control

min_steps_per_wvl = 20

# create the sphere

sphere = td.Structure(geometry=td.Sphere(center=center, radius=radius), medium=diel)

geometry = [sphere]

# define PML layers on all sides

boundary_spec = td.BoundarySpec.all_sides(boundary=td.PML())

# set the domain size in x, y, and z

domain_size = buffer_PML + 2 * radius + buffer_PML

# construct simulation size array

sim_size = (domain_size, domain_size, domain_size)

Create Source¶

For our incident field, we create a total-field scattered-field region injecting a plane wave propagating in the z direction, from below the sphere, polarized in the x direction.

# Bandwidth in Hz

fwidth = f0 / 10.0

# time dependence of source

gaussian = td.GaussianPulse(freq0=f0, fwidth=fwidth)

# place the total-field region around the sphere, injecting a plane wave from the bottom in the +z direction

source_size = [2 * radius + 2 * buffer_tfsf] * 3

source = td.TFSF(

center=center,

size=source_size,

source_time=gaussian,

direction="+",

pol_angle=0,

injection_axis=2,

)

# Simulation run time (s)

run_time = 2e-12

Create Monitors¶

Next, we define the monitors that will capture the near field data.

First, we create a FieldMonitor completely enclosing the sphere, using the .surfaces() method to extract the 6 planar surfaces surrounding the volume. This cuts down on the data required vs computing the full volume because only the fields on the enclosing surface are required to get the far field information.

The near fields will be captured on these surfaces; after the simulation, they will be used to compute far fields on your local machine.

We will also turn off server-side field colocation and store the near fields directly on the Yee grid. The fields are colocated during the field projection computation as needed.

# create a set of surface monitors around the sphere for local computation of far fields

mon_size = 2 * radius + 2 * buffer_mon

monitors_near = td.FieldMonitor.surfaces(

center=center, size=[mon_size] * 3, freqs=[f0], name="near_field", colocate=False

)

Next, we'll make a FieldProjectionAngleMonitor which is used for computing far-fields directly on the server during the simulation run with Tidy3D's full hardware optimization, making it extremely fast. Note that in this case, surfaces() must not be used because the solver already knows to use only surface tangential fields to compute the far fields.

With this approach, the near-field data associated with the monitor is used to compute the far fields, and only the far field data is downloaded and returned to the user. Then, we'll show how to easily and quickly retrieve various quantities such as power and radar cross section from the computed far fields.

Note that for server-side calculation of far fields, this is the only monitor required. The monitor's size and center fields specify the location where near fields will be sampled, while its theta and phi fields specify the far-field observation angles. Therefore, all the information needed is contained within FieldProjectionAngleMonitor (one can also use FieldProjectionCartesianMonitor or FieldProjectionKSpaceMonitor to specify the far-field observation grid in different coordinate systems).

# set the far-field observation angles of interest

num_theta = 300

num_phi = 2

thetas = np.linspace(0, np.pi, num_theta)

phis = np.linspace(0, np.pi / 2, num_phi)

# create the far field monitor for server-side computation of far fields

monitor_far = td.FieldProjectionAngleMonitor(

center=center,

size=[mon_size, mon_size, mon_size],

freqs=[f0],

name="far_field",

custom_origin=center,

phi=list(phis),

theta=list(thetas),

far_field_approx=True, # we leave this to its default value of 'True' because we are interested in fields sufficiently

# far away that the far field approximations can be invoked to speed up the calculation

)

Let's also create another near-field monitor where the fields are automatically downsampled based on given sampling rates. The rationale is that we may be able to approximate the far fields fairly well even if the resolution of near-fields is not very fine. This downsampled is achieved by supplying the interval_space field in the FieldMonitor, and can be useful for further reducing the amount of data that needs to be downloaded from the server, while still leading to accurate far fields, as shown below.

Here, we downsample by a factor of 2 along the x and y dimensions, and a factor of 3 along z.

Note that the downsampling feature can be useful if downloading near fields to compute far fields on your local machine, but is unnecessary if you choose to use server-side far field computations.

Like before, we also turn off the server-side field colocation.

# create a set of surface monitors around the sphere

monitors_downsampled = td.FieldMonitor.surfaces(

center=center,

size=[mon_size, mon_size, mon_size],

freqs=[f0],

name="near_field_downsampled",

colocate=False,

interval_space=(

2,

2,

3,

), # these are the (x, y, z) factors by which fields are downsampled

)

Create Simulation¶

Now we can put everything together and define the simulation object.

Note that for best results, it is recommended that the FDTD grid is made uniform inside the TFSF box along the directions perpendicular to the injection axis. So, we'll add a mesh override region slightly larger than the TFSF box itself, whose mesh size is set based on the permittivity of the sphere.

# create the phantom override structure for the mesh based on the TFSF source size

mesh_override = td.Structure(

geometry=td.Box(center=source.center, size=[i * 1.1 for i in source.size]),

medium=diel,

)

grid_spec = td.GridSpec.auto(

min_steps_per_wvl=min_steps_per_wvl, override_structures=[mesh_override]

)

# collect together all monitors

monitors = monitors_near + [monitor_far] + monitors_downsampled

# create the simulation object

sim = td.Simulation(

size=sim_size,

grid_spec=grid_spec,

structures=geometry,

sources=[source],

monitors=monitors,

run_time=run_time,

boundary_spec=boundary_spec,

)

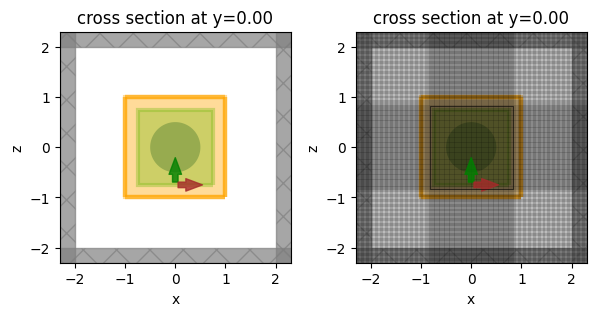

Visualize Geometry¶

Let's take a look and make sure everything is defined properly.

fig, ax = plt.subplots(1, 2, figsize=(7, 3))

sim.plot(y=0, ax=ax[0])

sim.plot(y=0, ax=ax[1])

sim.plot_grid(y=0, ax=ax[1])

plt.show()

Run Simulations¶

Now we can run the simulation over time and measure the results

sim_data = web.run(sim, task_name="sphereRCS", path="data/sphereRCS.hdf5", verbose=True)

View task using web UI at webapi.py:190 'https://tidy3d.simulation.cloud/workbench?taskId=fdve- 2f80db90-6695-4b3b-a074-cd037ae0e318v1'.

Setting Up the Local Near2Far Computation¶

To set up the near-to-far transformation locally, we need to grab the fields on each surface of the near-field FieldMonitor objects.

So, we simply create a FieldProjector object and pass in the surface monitors as shown below. Note that we also need to pass the normal directions of each of the monitors in the list.

In addition to storing the near field data, this object will compute the surface currents and provide various methods for projecting the far field quantities.

We can optionally pass in the number of points per wavelength in the background medium with which to sample fields on the monitors. The default is 10 points per wavelength. This can reduce computation times significantly. By default, 10 points per wavelength are used.

One can also pass in coordinates for the local origin of the set of monitors; the far-field observation points will be defined with respect to this origin. By default, the local origin is set to the average of the centers of all surface monitors passed in.

To see the usefulness of downsampling the fields recorded on monitors, we'll also run the near-to-far transformation with downsampled fields to compare the RCS.

Finally, to get a sense of performance, we'll measure the time it takes to compute the far fields locally, and compare it to the time it took to compute them on the server.

import time

# first, we construct the classes which compute far fields locally on your machine

n2f = td.FieldProjector.from_near_field_monitors(

sim_data=sim_data,

near_monitors=monitors_near, # only supply the non-downsampled surface monitors as sources

normal_dirs=["-", "+", "-", "+", "-", "+"],

pts_per_wavelength=10,

)

# do the same for the downsampled monitors

n2f_downsampled = td.FieldProjector.from_near_field_monitors(

sim_data=sim_data,

near_monitors=monitors_downsampled, # only supply the downsampled surface monitors as sources

normal_dirs=["-", "+", "-", "+", "-", "+"],

pts_per_wavelength=10,

)

# now, we retrieve the far fields in all three cases by passing in our far field monitor from before

start = time.time()

far_fields = n2f.project_fields(monitor_far)

end = time.time()

n2f_time = end - start

start = time.time()

far_fields_downsampled = n2f_downsampled.project_fields(monitor_far)

end = time.time()

n2f_downsampled_time = end - start

Performance comparison¶

We can see below that the local computation of far fields takes about the same time with and without downsampling, because the fields are anyway resampled on the near-field box prior to the computation. The real benefit of downsampling is reducing the amount of data that is stored and downloaded.

The server-side computation is extremely fast, as expected, and requires downloading no near-field data.

# use the simulation log to find the time taken for server-side computations

n2f_server_time = float(

sim_data.log.split("Field projection time (s): ", 1)[1].split("\n", 1)[0]

)

print(f"Local near-to-far: {n2f_time} s")

print(f"Local near-to-far with downsampling: {n2f_downsampled_time} s")

print(f"Server-side near-to-far: {n2f_server_time} s")

Local near-to-far: 1.1843602657318115 s Local near-to-far with downsampling: 1.1581153869628906 s Server-side near-to-far: 0.0826 s

Get Far Field Data for the Server-Side Computations¶

Recall that we also computed scattered far fields on the server; let's extract that data too.

far_fields_server = sim_data[monitor_far.name]

Compute the RCS¶

Now that we have the far fields computed in three different ways (locally, locally with downsampling, and remotely on the server), various far field quantities can be extracted.

For this example, we use FieldProjectionAngleData.radar_cross_section to get the RCS at the previously-specified theta,phi points.

# get the RCS for the local, local downsampled and server-side cases

RCS = np.real(far_fields.radar_cross_section.sel(f=f0).values)

RCS_downsampled = np.real(far_fields_downsampled.radar_cross_section.sel(f=f0).values)

RCS_server = np.real(far_fields_server.radar_cross_section.sel(f=f0).values)

Plot Results¶

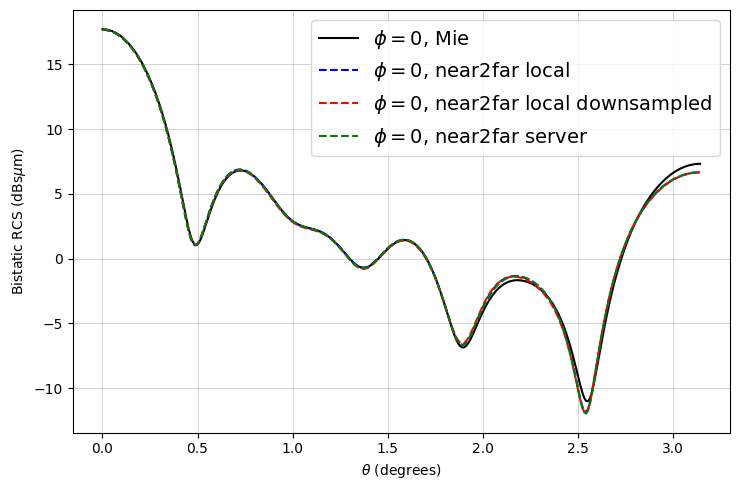

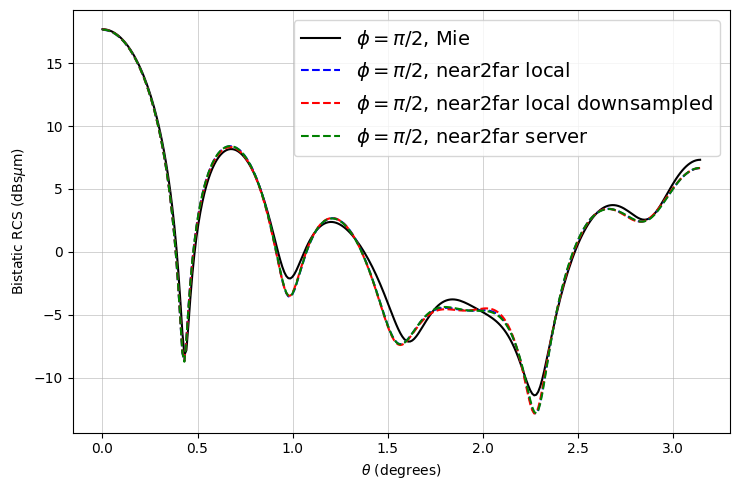

Now we can plot the RCS and compare it to the analytical RCS computed via the Mie series. The analytical results are stored in txt files which can be downloaded from our documentation repo.

The results match very well! As expected, there are minor deviations due to the FDTD discretization.

Notice that the downsampled monitors also yield fairly accurate results with less than an eighth of the data.

def to_db(val):

return 10.0 * np.log10(val)

RCS_phi0 = RCS[0, :, 0]

RCS_phi90 = RCS[0, :, 1]

RCS_downsampled_phi0 = RCS_downsampled[0, :, 0]

RCS_downsampled_phi90 = RCS_downsampled[0, :, 1]

RCS_server_phi0 = RCS_server[0, :, 0]

RCS_server_phi90 = RCS_server[0, :, 1]

# ------ import analytical data from disk ------

mie_file_id = "2lambda_epsr4"

mie_filename_phi0 = "./misc/mie_bRCS_phi0_" + mie_file_id + ".txt"

mie_filename_phi90 = "./misc/mie_bRCS_phi90_" + mie_file_id + ".txt"

mie_data_phi0 = np.loadtxt(mie_filename_phi0, delimiter="\t", skiprows=2)

mie_theta_phi0 = np.squeeze(mie_data_phi0[:, [0]])

mie_phi0 = np.squeeze(mie_data_phi0[:, [1]])

mie_data_phi90 = np.loadtxt(mie_filename_phi90, delimiter="\t", skiprows=2)

mie_theta_phi90 = np.squeeze(mie_data_phi90[:, [0]])

mie_phi90 = np.squeeze(mie_data_phi90[:, [1]])

# ------ plot for phi = 0 ------

fig, ax = plt.subplots(figsize=(7.5, 5))

ax.plot(mie_theta_phi0, to_db(mie_phi0), "-k", label="$\\phi = 0$, Mie")

ax.plot(thetas, to_db(RCS_phi0), "--b", label="$\\phi = 0$, near2far local")

ax.plot(

thetas,

to_db(RCS_downsampled_phi0),

"--r",

label="$\\phi = 0$, near2far local downsampled",

)

ax.plot(thetas, to_db(RCS_server_phi0), "--g", label="$\\phi = 0$, near2far server")

ax.set(

xlabel="$\\theta$ (degrees)",

ylabel="Bistatic RCS (dBs$\\mu$m)",

yscale="linear",

xscale="linear",

)

ax.grid(visible=True, which="both", axis="both", linewidth=0.4)

plt.legend(loc="best", prop={"size": 14})

plt.tight_layout()

# ------ plot for phi = pi/2 ------

fig, ax = plt.subplots(figsize=(7.5, 5))

ax.plot(mie_theta_phi90, to_db(mie_phi90), "-k", label="$\\phi = \\pi/2$, Mie")

ax.plot(thetas, to_db(RCS_phi90), "--b", label="$\\phi = \\pi/2$, near2far local")

ax.plot(

thetas,

to_db(RCS_downsampled_phi90),

"--r",

label="$\\phi = \\pi/2$, near2far local downsampled",

)

ax.plot(

thetas, to_db(RCS_server_phi90), "--g", label="$\\phi = \\pi/2$, near2far server"

)

ax.set(

xlabel="$\\theta$ (degrees)",

ylabel="Bistatic RCS (dBs$\\mu$m)",

yscale="linear",

xscale="linear",

)

ax.grid(visible=True, which="both", axis="both", linewidth=0.4)

plt.legend(loc="best", prop={"size": 14})

plt.tight_layout()